Analyzing Sucuri’s 2017 Hacked Website Trend Report

The Sucuri team just released their first annual security report that looks at telemetry from hacked websites – Hacked Website Report 2017. It uses a representative sample of infected websites from the Sucuri customer base to better understand end-user behavior and bad-actor tactics.

It specifically focuses on 34,371 infected websites, aggregating data from two distinct groups: the Remediation and Research Teams. These are the teams that work side by side with the owners of infected websites every day, and they are also the team members who generate much of the research shared on the Sucuri Blog.

In this post I will expand on the analysis shared, and add my own observations.

CMS Analysis

The report shows an increase in the share of infected sites running WordPress, but that is not indicative of WordPress being more or less secure than other platforms, or of WordPress core being the exploited vector. It is, however, indicative of WordPress’s popularity; it also speaks to Sucuri’s popularity in the WordPress ecosystem.

What’s frankly more interesting to me is what you find in the Outdated CMS Analysis section.

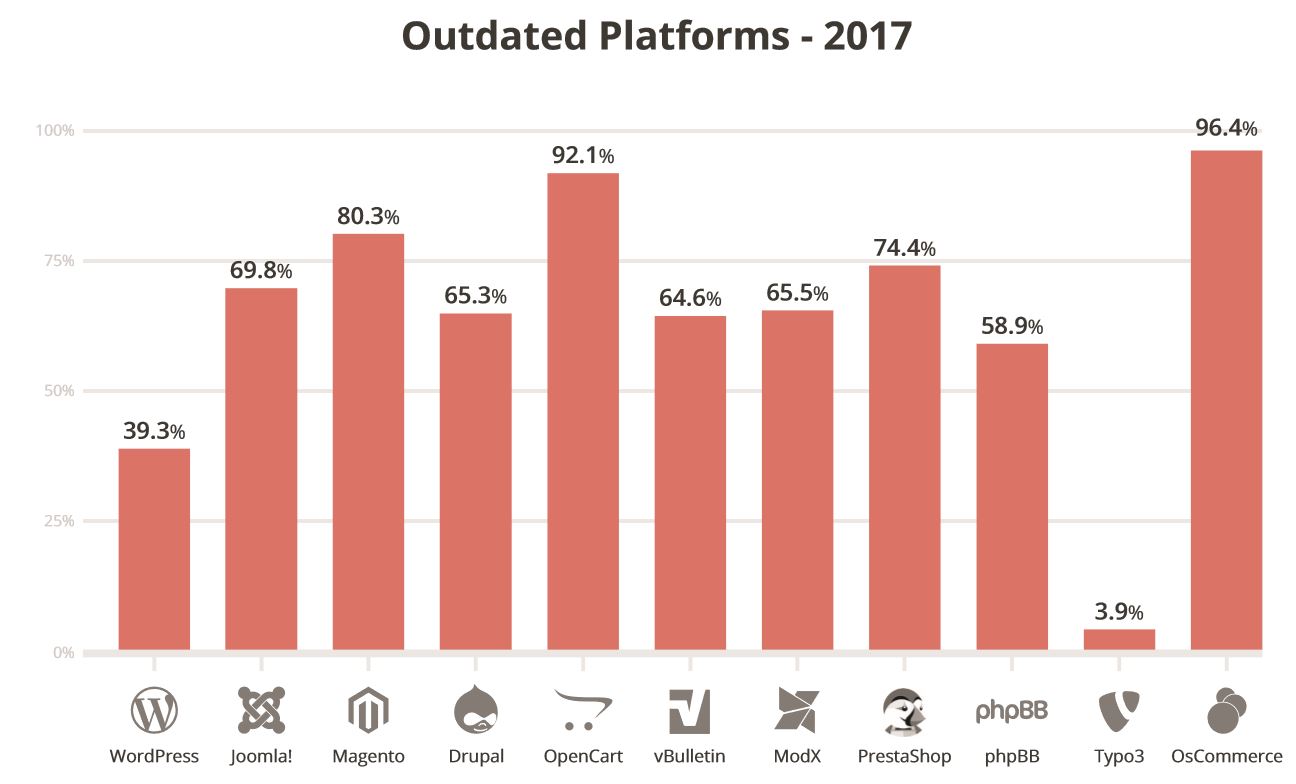

The outdated platforms chart highlights two things:

- WordPress Version – In the 2016 report, 61% of WordPress sites were out of date at the point of infection. In 2017, 39.3% were out of date at the point of infection. That’s a pretty sharp decline in out-of-date WordPress instances. I attribute this directly to the WordPress core team’s work to implement more secure-by-default features (e.g., auto-updates).

- E-commerce Platforms – What’s concerning is the current state of open-source e-commerce CMS applications. Take a look at the out-of-date rates for OpenCart (92%), PrestaShop (74%), osCommerce (96%), and Magento (80.3%). These are the core applications users rely on to perform online commerce transactions. In many ways this speaks directly to how complex these platforms are to upgrade, which, when coupled with human behavior, is a recipe for disaster. Unfortunately, you could argue that these platforms, more than others, should have easier maintenance mechanisms, since they manage sensitive data such as credit cards.
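To put a number on how sharp the WordPress decline is, the two figures quoted above can be expressed as both an absolute and a relative change. This assumes the 2016 and 2017 samples are comparable enough to subtract directly:

$$
61\% - 39.3\% = 21.7 \text{ percentage points}, \qquad \frac{21.7}{61} \approx 0.356
$$

In other words, the share of WordPress sites that were out of date at the point of infection fell by roughly a third (about 35.6%) between the two reports.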

Remember that the real threat to any CMS lies in its extensible components (e.g., modules, plugins, and extensions).

The common theme across all CMS exploits can be boiled down to the following:

- Poor maintenance behavior by website owners;

- Failure to stay current across the entire stack;

- Exploitation of extensible components (plugins, extensions, etc.);

- Security misconfiguration by website owners;

- Poor security knowledge and resources;

- Broken authentication.
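Staying current across the entire stack mostly comes down to knowing which components lag behind. As a minimal sketch, assuming you already keep an inventory of installed versions and the latest available versions (the component names and versions below are hypothetical), and that versions are plain dotted integers, you can compare them numerically rather than as strings so that 2.10 is correctly treated as newer than 2.3:

```python
# Flag components whose installed version lags the latest known release.
# Hypothetical sketch: the inventory below is made-up example data, and
# versions are assumed to be plain dotted integers (no "-beta" suffixes).
from dataclasses import dataclass


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '4.9.1' into (4, 9, 1), dropping trailing zeros so '4.9.0' == '4.9'."""
    parts = [int(piece) for piece in version.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


@dataclass
class Component:
    name: str       # CMS core, a plugin, a theme, a server package, ...
    installed: str  # version currently deployed
    latest: str     # newest version available


def outdated(components: list[Component]) -> list[str]:
    """Return the names of components that are behind their latest version."""
    return [
        c.name
        for c in components
        if parse_version(c.installed) < parse_version(c.latest)
    ]


stack = [
    Component("core", "4.9.1", "4.9.1"),
    Component("example-plugin", "2.3", "2.10"),  # numeric compare: 2.3 < 2.10
    Component("example-theme", "1.0.0", "1.0"),
]
print(outdated(stack))  # ['example-plugin']
```

The hard part in practice is keeping the "latest" column accurate for every plugin, theme, and server package, which is exactly where the maintenance behavior described above tends to break down.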

Blacklist Analysis

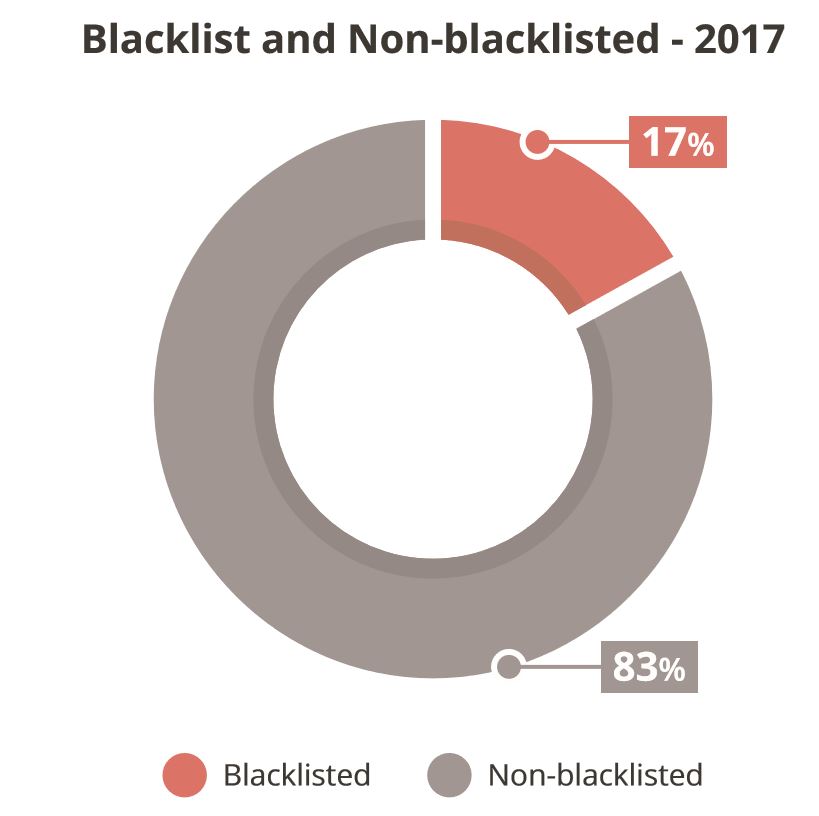

The report also highlights the distribution of blacklisted and non-blacklisted sites from the same sample of roughly 34k sites. For me this illustrates a) the different indicators of compromise and b) the effectiveness and reach of blacklist authorities.

The most interesting finding in this section is that only 17% of those sites were blacklisted. There are many ways to interpret this, but for me it illustrates the importance of continuous monitoring: with only a 17% detection rate, depending solely on authorities like Google, Norton, and McAfee is not enough.

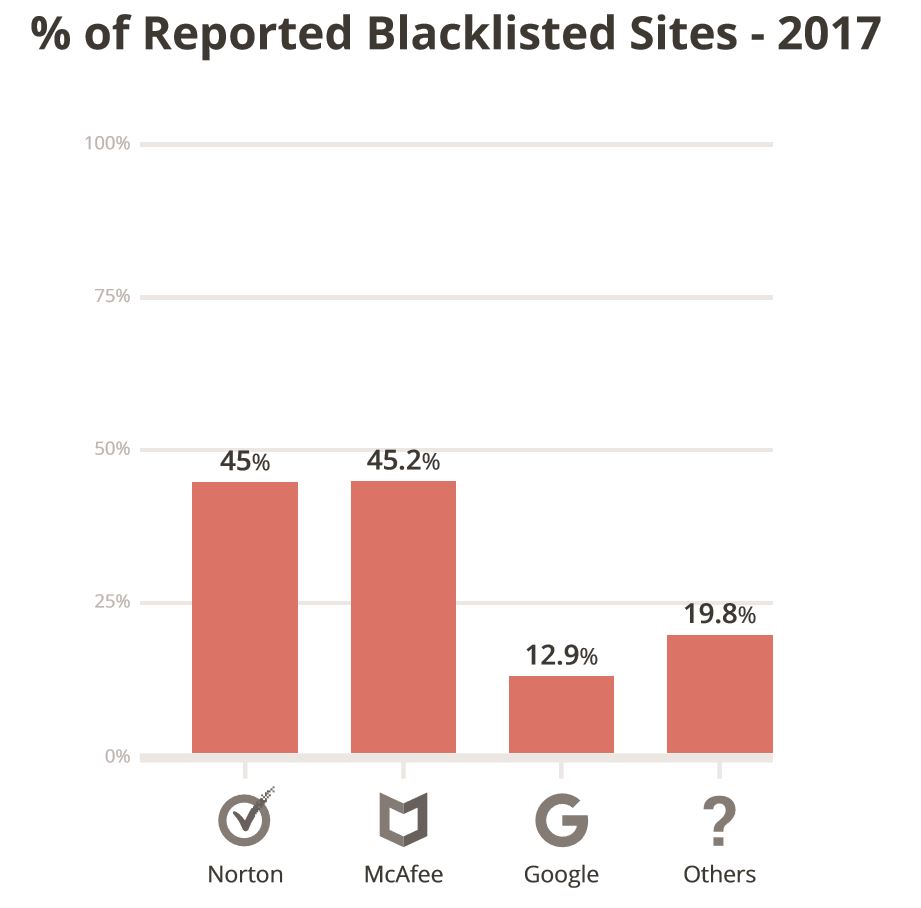

This becomes even more evident when you look at detection effectiveness across the different authorities. Of the blacklisted sites, Google flagged only 12.9%, while Norton and McAfee each held a detection share of about 45%.
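Applying those percentages to the 34,371-site sample gives a rough sense of scale. This is a back-of-the-envelope sketch: it assumes the Google, Norton, and McAfee figures are each a share of the blacklisted sites (not of the whole sample), and the counts it prints are estimates derived from rounded percentages, not numbers published in the report.

```python
# Back-of-the-envelope conversion of the reported blacklist percentages
# into approximate site counts. Inputs are the figures quoted in this post;
# the outputs are estimates because the percentages are rounded.
TOTAL_SITES = 34_371      # infected sites in the report's sample
BLACKLISTED_RATE = 0.17   # share of those sites that were blacklisted

# Share of the *blacklisted* sites flagged by each authority (assumption).
AUTHORITY_SHARE = {"Google": 0.129, "Norton": 0.45, "McAfee": 0.45}

blacklisted = round(TOTAL_SITES * BLACKLISTED_RATE)
not_blacklisted = TOTAL_SITES - blacklisted
flagged_by = {name: round(blacklisted * share) for name, share in AUTHORITY_SHARE.items()}

# If these shares add up to more than 100%, at least some sites must have
# been flagged by more than one authority.
combined_share = sum(AUTHORITY_SHARE.values())

print(f"Blacklisted: ~{blacklisted:,} of {TOTAL_SITES:,}")
print(f"Never blacklisted: ~{not_blacklisted:,}")
for name, count in flagged_by.items():
    print(f"  {name}: ~{count:,}")
print(f"Combined share of the three authorities: {combined_share:.1%}")
```

Under these assumptions, well over 28,000 infected sites never showed up on any blacklist at all, and Google, the authority most visitors will actually encounter, flagged only several hundred of them.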

Not all blacklist authorities are the same. Google is the most prominent because its blacklist is the one most browsers leverage, most notably Chrome. The Sucuri team put together a great guide to understanding the different Google warnings. When Google detects an issue, the browser presents visitors with a red splash page that stops them in their tracks.

Other entities, however, are effective for a different reason. When Norton or McAfee flags your site, anyone using their desktop AV client will be prevented from visiting it, or at least warned of an issue. These entities also share their APIs with a number of other services and products; a great example is Facebook’s use of McAfee to parse malicious domains.

Blacklist authorities don’t work the same way. Being blacklisted by one doesn’t necessarily mean you will be blacklisted by the others, and being removed from one doesn’t mean you will be removed from the others. This introduces a lot of stress and frustration for website owners, even though the impact always seems equally detrimental whichever authority flags you.

I still highly recommend registering with each organization, but dealing with them can be stressful when it’s unclear why something was flagged.
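Because each authority flags and delists independently, it helps to track status per authority rather than as a single yes/no. A minimal sketch, assuming you already collect a periodic snapshot of whether each authority currently flags the site (the collection step, authority list, and example data are all hypothetical), is to diff consecutive snapshots:

```python
# Compare two snapshots of per-authority blacklist status.
# Hypothetical sketch: how each snapshot is gathered (APIs, webmaster
# consoles, manual checks) is out of scope; each snapshot is a dict
# mapping authority name -> True if the site is currently flagged.


def diff_status(previous: dict[str, bool], current: dict[str, bool]) -> dict[str, list[str]]:
    """Report which authorities newly flagged, cleared, or still flag the site."""
    authorities = sorted(set(previous) | set(current))
    was = {a: previous.get(a, False) for a in authorities}
    now = {a: current.get(a, False) for a in authorities}
    return {
        "newly_flagged": [a for a in authorities if now[a] and not was[a]],
        "cleared": [a for a in authorities if was[a] and not now[a]],
        "still_flagged": [a for a in authorities if was[a] and now[a]],
    }


yesterday = {"Google": True, "Norton": True, "McAfee": False}
today = {"Google": False, "Norton": True, "McAfee": True}
print(diff_status(yesterday, today))
# {'newly_flagged': ['McAfee'], 'cleared': ['Google'], 'still_flagged': ['Norton']}
```

The example mirrors the frustration described above: being cleared by Google says nothing about Norton, which still flags the site, and McAfee has started flagging it in the meantime.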

Malware Families

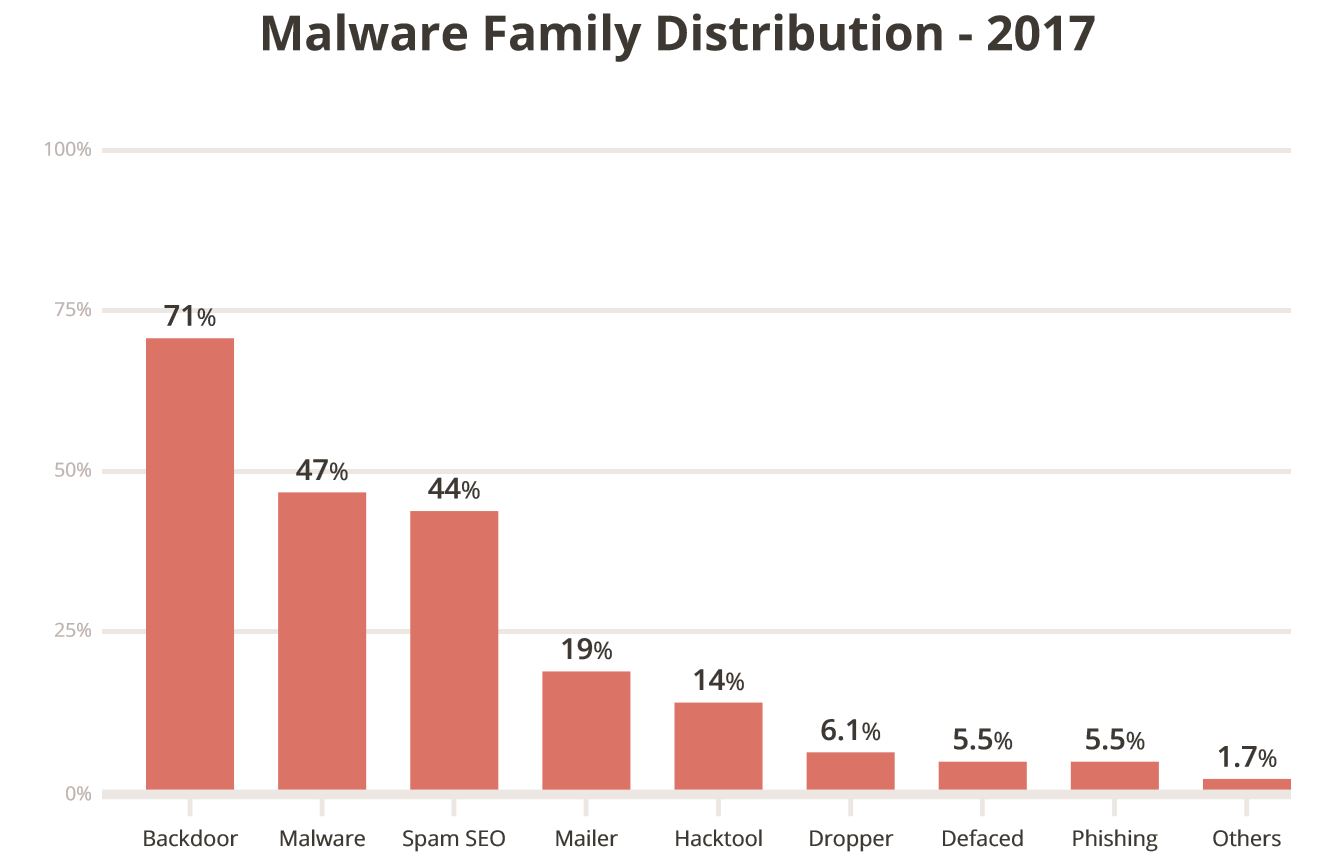

I am particularly interested in this section, because it lets me extrapolate an actor’s desired actions on objective (i.e., motives).

When reading the data, note that a single site can contain multiple malware families. An attacker will often deploy a series of actions on objective; backdoors are a great example.

Backdoors are payloads designed to give attackers continued access to an environment, bypassing any existing access controls. They were found in 71% of the infected sites analyzed. You can expect backdoors to be one of the first things an attacker deploys, to ensure that even if their actions are discovered, they can retain access and continue to use the site for nefarious purposes. Backdoors are also one of the leading causes of reinfection, and one of the most commonly missed payloads.
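Because backdoors are so commonly missed, even a crude check is better than none. The sketch below is a hypothetical, signature-style heuristic, not Sucuri’s detection logic: it flags PHP files containing a couple of widely seen idioms, such as `eval()` wrapped around `base64_decode()`, or shell-execution functions fed directly from request variables. Real backdoors are frequently obfuscated well beyond these patterns, and legitimate code can match them, so treat any hit as a lead for manual review.

```python
# Naive scan for a few well-known PHP backdoor idioms.
# Hypothetical heuristic: these patterns are illustrative, not a complete
# signature set, and legitimate code can match them too.
import re
from pathlib import Path

SUSPICIOUS_PATTERNS = {
    # eval() wrapped around a decoding/deobfuscation call.
    "eval_of_decoded_payload": re.compile(
        r"\beval\s*\(\s*(base64_decode|gzinflate|str_rot13)\s*\(", re.IGNORECASE
    ),
    # Code- or command-execution functions fed directly from request data.
    "exec_of_request_data": re.compile(
        r"\b(eval|assert|system|shell_exec|passthru)\s*\(\s*\$_(GET|POST|REQUEST|COOKIE)",
        re.IGNORECASE,
    ),
}


def scan_text(source: str) -> list[str]:
    """Return the names of the suspicious patterns found in PHP source text."""
    return [name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(source)]


def scan_tree(root: str) -> dict[str, list[str]]:
    """Scan every .php file under root; map each flagged path to its matches."""
    hits = {}
    for path in Path(root).rglob("*.php"):
        if not path.is_file():
            continue
        matches = scan_text(path.read_text(errors="ignore"))
        if matches:
            hits[str(path)] = matches
    return hits
```

Calling `scan_tree()` on a web root (any path you pass is your own; none is assumed here) returns a mapping of file paths to matched pattern names. A clean result does not mean the site has no backdoor; it only means none of these particular idioms appeared verbatim.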

The continued rise of SEO spam is of special interest. This is often the result of a Search Engine Poisoning (SEP) attack, in which an attacker abuses your site’s search ranking to promote something they are interested in. Years ago this was almost synonymous with the Pharma Hack, but these days attackers leverage it in a number of other industries (e.g., fashion, loans). You can expect it in any industry where impression-based affiliate marketing is at play.

The section goes on to describe the specific tactics attackers employ to deploy payloads like SEO spam.

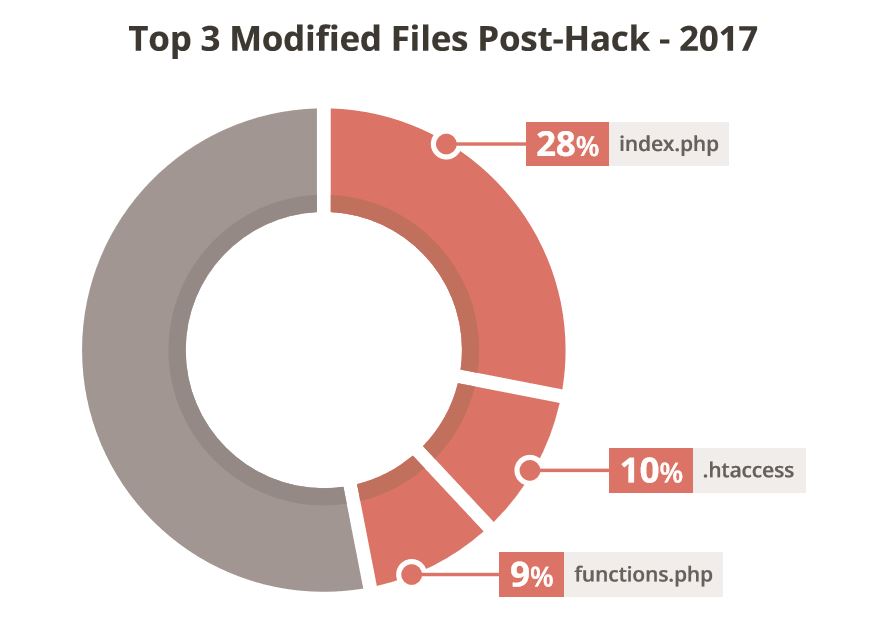

For example, the top three modified files were index.php (28%), .htaccess (10%), and functions.php (9%). The report outlines how each of these files is leveraged, and for which malware families. SEO spam is deployed specifically in the .htaccess and functions.php files, while functions.php files are also leveraged for malware distribution (e.g., drive-by downloads) and backdoors. Index files are more multi-purpose, and are used for server scripts, malware distribution, and a few other variations.
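Since .htaccess shows up as a vehicle for SEO spam, one thing worth checking is rewrite rules that only fire for visitors arriving from, or crawlers identifying as, a search engine. That pattern is an assumption on my part (it is a commonly seen conditional-redirect trick, not something the report spells out), and the engine list below is illustrative; legitimate configurations can reference these headers too, so review every hit by hand.

```python
# Flag .htaccess RewriteCond rules keyed on search-engine traffic.
# Hypothetical heuristic: conditional rewrites on the Referer or User-Agent
# header that mention a search engine are treated as worth a closer look.
import re

# A RewriteCond that inspects the Referer or User-Agent header and names a search engine.
SEARCH_ENGINE_COND = re.compile(
    r"^\s*RewriteCond\s+%\{HTTP_(REFERER|USER_AGENT)\}.*(google|bing|yahoo|baidu|yandex)",
    re.IGNORECASE,
)


def suspicious_rewrite_conditions(htaccess_text: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs for search-engine-keyed RewriteCond rules."""
    return [
        (number, line.strip())
        for number, line in enumerate(htaccess_text.splitlines(), start=1)
        if SEARCH_ENGINE_COND.search(line)
    ]


sample = """RewriteEngine On
RewriteCond %{HTTP_REFERER} (google|bing|yahoo) [NC]
RewriteRule ^(.*)$ http://spam.example/ [R=302,L]
"""
print(suspicious_rewrite_conditions(sample))
# [(2, 'RewriteCond %{HTTP_REFERER} (google|bing|yahoo) [NC]')]
```

The sample rule (with a placeholder destination) redirects only visitors coming from search results, which is why the site can look perfectly normal to its owner while still serving spam.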

There is a lot of goodness in this section. It highlights the importance of employing appropriate file permissions and, indirectly, where to focus your file integrity monitoring systems.
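Since index.php, .htaccess, and functions.php account for the most modifications, they are natural first targets for integrity monitoring. The sketch below is a minimal, hypothetical illustration, not a substitute for a real file integrity monitoring product: it records SHA-256 digests of every file with one of those three names under a web root, compares later snapshots against that baseline, and flags watched files whose permissions make them writable by any user.

```python
# Minimal file integrity check focused on the most commonly modified files.
# Hypothetical sketch: a real FIM tool also covers other files, alerting,
# and tamper-resistant storage of the baseline.
import hashlib
import json
import os
import stat

WATCHED_NAMES = {"index.php", ".htaccess", "functions.php"}


def snapshot(root: str) -> dict[str, str]:
    """Map each watched file under root to the SHA-256 digest of its contents."""
    digests = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name in WATCHED_NAMES:
                path = os.path.join(dirpath, name)
                with open(path, "rb") as handle:
                    digests[path] = hashlib.sha256(handle.read()).hexdigest()
    return digests


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Classify differences between a stored baseline and a fresh snapshot."""
    return {
        "modified": sorted(p for p in baseline.keys() & current.keys() if baseline[p] != current[p]),
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
    }


def world_writable(paths) -> list[str]:
    """Return the paths whose permission bits let any user write to them."""
    return sorted(p for p in paths if os.stat(p).st_mode & stat.S_IWOTH)


def save_baseline(digests: dict[str, str], baseline_file: str) -> None:
    """Persist a snapshot as JSON so later runs can compare against it."""
    with open(baseline_file, "w") as handle:
        json.dump(digests, handle, indent=2, sort_keys=True)


def load_baseline(baseline_file: str) -> dict[str, str]:
    """Read a previously saved snapshot."""
    with open(baseline_file) as handle:
        return json.load(handle)
```

A typical flow would be to save a baseline right after a known-clean deployment, then periodically run `compare(load_baseline(...), snapshot(...))` and `world_writable(...)` on the snapshot’s keys. Legitimate updates will also show up as modifications, so re-baseline after every known-good change.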

There are other TTPs I’d like to find a way to measure better, such as lateral movement within an environment (a strong contributor to reinfections) and supply chain poisoning (SCP) attacks. That is a write-up for another day.

I encourage you to read through the report and share the parts you find most insightful. Your feedback will help Sucuri continue to improve their analysis and reporting.